If you haven’t been living under a rock, or maybe even if you have, you must have heard of artificial intelligence: the term “AI” is everywhere nowadays. And whether or not you’re aware of it, your foreseeable future is likely tied to it, for better or for worse.

So what is AI, exactly? Is it all that good or that scary?

The short answer is both. Depending on who you talk to, you might get a sense of excitement or apprehension.

Personally, I have no skin in the current AI game (not knowingly, anyway), and I’m somewhat more on the “apprehension” team. This post will explain all that in simple terms, at my level of natural intelligence.

Just to be clear, I’m talking about the actual development of AI, not “AI” as a marketing ploy used across various products.

What is Artificial Intelligence?

To understand artificial intelligence, we first need to know what intelligence is.

If you look it up, intelligence is defined as the ability to acquire and apply knowledge to adapt. Its connotations grow more complex the deeper you dig, but ultimately, intelligence is a tool required for the survival of the entity that possesses it.

The more intelligent that entity is, the longer and better it can survive in the form it wants to be in. That form is the key: a rock has no intelligence and can last forever.

Since lasting implies time, and life is a series of consecutive decisions, I’d put it this way, at its core: Intelligence is the ability to predict the future and all that implies.

Note: We’re all stuck at a single moment in time, called the “present,” and have to wait to see how things pan out. So every expectation of what comes next is, in a sense, a prediction, including those so apparent that we think we “know.” By “prediction,” I don’t necessarily mean fortune-telling or prophecies; I also include figuring out the outcome of a specific problem or situation, small or large.

In this simplified definition, intelligence means:

- Knowing which event will happen because it’s inevitable, given current or past events. Or:

- Forming or even controlling future events using skills and resources. Or:

- The combination of both in various degrees.

The higher the accuracy, the farther forward, the more (and more complex) the events you can predict or manage, the more intelligent you are.

For example, everyone can predict that if you throw an apple into the air, it will eventually fall back to the ground. However, the accuracy of its predicted rise and landing depends on the predictor’s intelligence. On this front, so far, the best person for the job was Isaac Newton.
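To make “accuracy” concrete, here’s the kind of prediction Newton’s laws make possible. This is a minimal Python sketch, assuming the apple is thrown straight up from ground level and ignoring air resistance (real apples also feel drag); the function name `predict_throw` is just for illustration.

```python
# A minimal sketch of a Newton-style prediction: an object thrown straight up,
# ignoring air resistance (an assumption; real apples also feel drag).
G = 9.81  # gravitational acceleration near Earth's surface, in m/s^2


def predict_throw(initial_speed: float) -> tuple[float, float]:
    """Return (peak height in meters, seconds until landing) for an object
    thrown straight up at initial_speed m/s from ground level."""
    peak_height = initial_speed ** 2 / (2 * G)  # height where upward speed reaches zero
    flight_time = 2 * initial_speed / G         # going up and coming down take equal time
    return peak_height, flight_time


height, seconds = predict_throw(9.81)
print(f"Peak: {height:.2f} m, lands after {seconds:.2f} s")
```

A throw at about 9.8 m/s (roughly 22 mph) peaks near 4.9 meters and lands about 2 seconds later. Anyone can guess that the apple comes back down; predicting exactly when and how high is where the extra intelligence comes in.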

So, as you might have predicted by this point, intelligence is first and foremost highly nuanced, so much so that many colorful everyday adjectives in the English language describe different levels of intelligence, or the lack thereof, among us mortals.

Next, intelligence is a work in progress that fluctuates. Generally, we become more intelligent, as individuals or as a species, over time through accumulated knowledge and practice.

Finally, there’s one thing all intelligent creatures have in common: a sense of awareness and purpose. In humans, we often call it consciousness and conscience or, more poetically, the soul. After all, this soul is the “form” in the survival notion mentioned above.

And that brings us to Artificial Intelligence. It’s “artificial” because no consciousness is required. So, in my opinion, in a sentence: AI is a tool that predicts the future on demand.

Hopefully, we’ll do a good job of the demanding part; more on that below. Other than that, AI itself is also highly nuanced, a work in progress that improves over time. Most importantly, aside from the “artificial” notion, the more AI grows, the less idea we have of what it is, including those who work on making it happen.

So, how does AI grow?

Artificial Intelligence: Traditional vs. Generative

Artificial Intelligence is not new. The term was coined in the 1950s, around the time “machine learning” began as a new scientific field. In fact, you can call almost all computer software AI to an extent. The calculator is definitely a piece of AI machinery for math.

Speaking of calculating, technically, like all computers, AI only deals with numbers. We input a bunch of numbers (or computer code), and the AI spits out another bunch as the output, based on the algorithm it’s programmed with and the information it has accumulated to “educate” itself.

However, all information (data, content, photos, text, videos, etc.) can be converted to and from numbers. In computing, this process is generally called digitalization.
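Here’s a tiny illustration of that conversion, using the UTF-8 text encoding built into Python. Real AI models use their own “tokenizers” that map chunks of text to ID numbers, so treat this as a simplified stand-in for the idea, not how any particular model does it.

```python
# A minimal sketch of turning text into numbers and back with UTF-8.
# (LLMs use their own tokenizers, but the idea of a reversible
# text-to-numbers mapping is the same.)
text = "Hello, AI"

numbers = list(text.encode("utf-8"))  # each byte becomes an integer from 0 to 255
print(numbers)  # [72, 101, 108, 108, 111, 44, 32, 65, 73]

restored = bytes(numbers).decode("utf-8")  # and back again, with nothing lost
print(restored)  # Hello, AI
```

For plain text like this, the round trip is lossless. The open question raised below is what happens to human nuance once meaning, not just letters, has to pass through the numbers.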

Nowadays, many AI tools use a large language model (LLM), which allows humans to communicate with them in natural language while the digitalization takes place behind the scenes. That doesn’t mean AI “understands” natural language the way we humans do.

The truth is that we still don’t know, and likely never will, what is lost in translation (during the digitalization) in terms of human-related nuances, and how things are really manipulated within the digitalized world, or the AI “box”. In short, the more sophisticated AI becomes, the more likely we are to lose touch with what it actually does to produce its output.

Still, the point remains: AI is basically a computer (or computers), big or small, that performs many calculations to make specific tasks more efficient. With that, let’s start with traditional AI, which, again, has been available and improving for over half a century. This type of AI is also more under our control since it’s “simple”.

Traditional AI: Optimal output from existing data

Traditionally, artificial intelligence improves the efficiency of specific tasks by leveraging previous human inputs. Here are a couple of early examples of traditional AI:

- Cruise control: An automation feature, available since the late 1950s, that can drive a car on its own to a limited extent (below Level 2 driving automation, to be specific).

- Spell checking: A convenient way to keep your text typo-free, long a staple of word processors like Microsoft Word (first released in 1983).

We’ll revisit these two examples below, but it’s clear that, over the years, they have improved significantly and are now far more capable than they used to be, thanks to advances in AI.

In my opinion, the most “shocking” exhibit of traditional AI came in 1997, when IBM’s Deep Blue chess-playing machine beat world chess champion Garry Kasparov. Since then, AI has become so good at chess that, in a human-only tournament, a player who wins with a novel strategy could be suspected of getting AI help.

Fast-forward to today: here are a few examples of AI in action, so common that we often don’t even notice them:

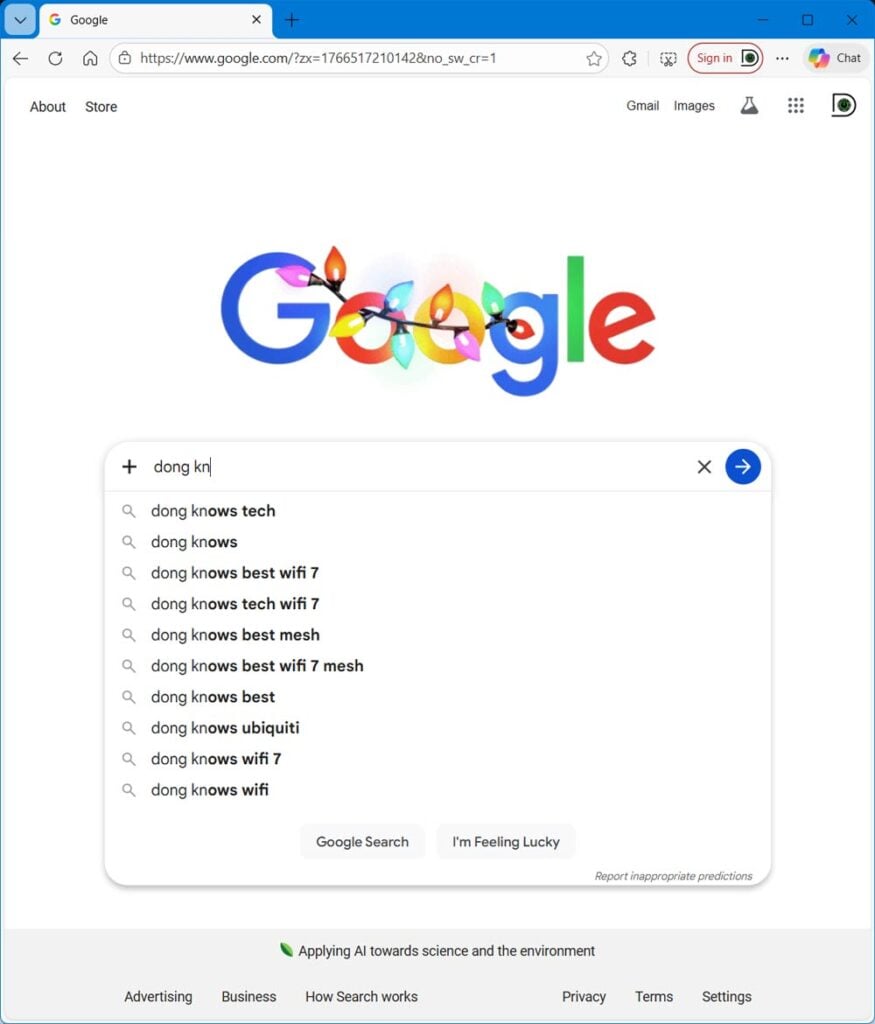

- Google predicts the next word as you type a search term.

- YouTube, Netflix, or social media automatically recommend new content based on your viewing habits or preferences.

- Virtually any online transaction, which is typically screened for fraud automatically.

In any case, here’s the key to traditional AI: it generally manipulates existing data (information, content, etc.) to quickly produce the optimal result. In other words, it doesn’t produce anything new or original.
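Google’s real next-word suggestions are far more sophisticated, but the basic idea of predicting from existing data can be sketched in a few lines. This is a minimal Python sketch under stated assumptions: the search history is made up for illustration, and the function names are hypothetical. It simply counts which word most often followed another in past searches.

```python
from collections import Counter, defaultdict
from typing import Optional


def build_next_word_table(past_searches: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words followed it in past searches."""
    table: dict[str, Counter] = defaultdict(Counter)
    for search in past_searches:
        words = search.lower().split()
        for current, following in zip(words, words[1:]):
            table[current][following] += 1
    return table


def predict_next_word(table: dict[str, Counter], word: str) -> Optional[str]:
    """Suggest the word that most often followed `word`, or None if unseen."""
    followers = table.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical search history, made up for illustration.
history = [
    "best wifi router",
    "best wifi mesh system",
    "best wifi router 2025",
    "best coffee maker",
]
table = build_next_word_table(history)
print(predict_next_word(table, "best"))    # wifi (followed "best" 3 times vs. "coffee" once)
print(predict_next_word(table, "wifi"))    # router (2 times vs. "mesh" once)
print(predict_next_word(table, "banana"))  # None: nothing in the data to go on
```

Notice that it can only ever suggest words it has already seen, which is exactly the “nothing new or original” limitation of traditional AI.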

Then things changed.

In recent years, AI has become super good at coding, which makes sense given that programming languages are generally rigid in terms of syntax, meaning, and structure (somewhat similar to chess). You can tell an AI what you want a piece of software to do, and it’ll churn out working code (though not always bug-free) in a fraction of the time a human programmer would need just to type it into a text editor.

The result of this automated coding depends on the complexity of the application, but the point is that AI can now create something new and original. And that brings us to a new type of AI: Generative AI, a.k.a. GAI or GenAI.

Generative AI: Forming new and original future events

As the name suggests, this type of AI can generate original content on its own by “training” itself on specific subject(s) using existing information. GenAI incorporates traditional AI, and the line between the two is often blurred, partly because GenAI is still at a very early stage.

ChatGPT, a product by OpenAI, is the first GenAI to go mainstream and the most popular example. Its name tells us most of what we need to know about this type of AI. Specifically:

- Chat is the form the engine uses to interface with users. It’s a chatbot powered by a large language model, enabling users to interact with it in natural language.

- G is for “generative”.

- P is for “pre-trained,” meaning the model was first trained on huge amounts of existing text, then refined with human help.

- T is for “transformer,” the type of neural network design the engine is built on. It turns human inputs, called “prompts,” into new content. More on “prompts” below.

To sum up: ChatGPT is a chatbot that uses pre-trained transformers to generate content from human prompts.

Other competing GenAI engines, such as Microsoft’s Copilot and Google’s Gemini, also use chatbot-style interfaces.

The way Generative AI works: Deep learning and its exciting novelties

Generative AI is built on what’s called a “neural network,” a mathematical model loosely inspired by the neurons in our brains. Training and running a large one requires an extensive network of many computers housed in massive data centers.

This Neural Network performs deep learning. Specifically, it first gathers extensive amounts of information, often by sending bots to scour the Internet in a controversial process akin to content theft.

Since mid-2024, Google’s AI Overview has destroyed many blogs.

But let’s just assume that everything is done appropriately. In that case, a GenAI model would become a super-intelligent entity that has all the answers in the field it’s trained in. And if it’s trained in all fields, it literally becomes God.

For example, you can imagine a well-trained GenAI in physics as an entity with the knowledge of Isaac Newton and Albert Einstein combined, able to answer any problem in motion or quantum physics on demand. Now, all you need to do is query it correctly. But asking the right question can be very hard, especially when you want a solution to a complex matter.

For this reason, with GenAI, there’s a new required skill set called prompt engineering, which, in a nutshell, is the method for structuring or framing queries to a generative AI to produce the best output. You have to speak the AI language, so to speak. (Again, that’s because we don’t and never will understand what’s happening within the AI “box” since, among other things, we don’t use numbers as the only means to communicate.)

That said, in theory, if you’re well-trained in prompt engineering and working with a well-trained GenAI, your imagination would literally be the limit. For example, you can give the AI a movie script, and it will generate an entire movie in a fraction of the time the traditional process of casting, acting, pre-production, post-production, and so on would take.

Alternatively, you can feed the AI information about a particular cancer cell, and it’ll come up with a method or medicine to cure that cancer, something that, if possible at all, would take humans years, if not decades, to achieve.

The point is that GenAI can, in theory, solve anything, create anything, instantly. That’s the idea. To be clear, the current GenAI is still a long way from that—we don’t even know what that actually entails.

Here are a few real-life examples of what GenAI can do today:

- Self-driving: Waymo’s driverless taxis have been a reality for years, with Tesla’s cars approaching that level of automation (sometimes). In any case, this is a huge advancement from the cruise control feature.

- You can ask GenAI to write an entire article or even an essay. While these are often generic at best and plagiarism at worst, it’s a great leap from the original spell checker.

- You can ask GenAI to draw a picture or make a quick video of a particular object. Depending on the prompts and inputs, the results are often better than passable, even enough to fool many humans, which is not exactly a good thing, as discussed below.

Keep in mind that GenAI isn’t always great. Even the latest models often make “dumb” mistakes, spitting out false information or even nonsense. In any case, it’s fair to say GenAI is still at an early stage: ChatGPT launched only a little over three years ago. As a result, to some, its future seems nothing but bright.

Does it, though?

How AI can be overrated and dangerous

Like all things, AI has a flip side. And this is where it gets scary, though not in the way you might expect from watching the news.

Folks are often worried that AI might “take their job,” rendering them unemployable. And while that might be true, and has even happened to a certain degree in past decades, it’s not exactly a huge concern. As humanity has progressed technologically over the centuries, each time certain types of jobs became obsolete, new and better opportunities arose.

For example, it took America only about a decade in the early 1900s to completely transform from horse-based transportation, which had lasted for centuries, to automobiles. Lots of jobs were lost during the period, only to be replaced by many more that were not only higher-paying but also less labor-intensive.

That said, the first adverse effect, and the actual cost, of AI is something you can’t see: the energy use.

The energy cost and the possible over-investment bubble

As mentioned, GenAI needs to continually gather information, train itself, and produce responses at scale. All that takes energy. And even when it’s not doing anything, like a computer, it still needs a lot of energy to be “on” and ready.

How much energy, you might wonder? This varies depending on the situation and the model’s size, but the short answer is: a lot!

According to MIT Technology Review, responding to a small prompt such as “how long should I reheat pasta?” can take an AI model about as much electricity as running the microwave itself for 8 seconds. A larger request, such as generating a five-second video, uses much more energy, enough to run the appliance for over an hour.

And that adds up. ChatGPT, for example, reportedly receives some 2.5 billion prompts a day. To respond to them, OpenAI’s data centers use a massive amount of electricity. Aside from the cost of the resources, there’s also the carbon footprint, as data centers often use natural gas and coal to produce power. It’s estimated that in a year, with 2.5 billion queries a day, ChatGPT could emit the same amount of carbon as driving a gas car around the Earth 11,000 times.
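To see how quickly small numbers add up, here’s a back-of-the-envelope calculation in Python. It’s a rough sketch, not a measurement: the 800-watt microwave is my assumption, and it pretends every one of the 2.5 billion daily prompts is a “small” one costing 8 microwave-seconds, which real traffic certainly isn’t.

```python
# Back-of-the-envelope math; every input here is an assumption or a reported figure.
MICROWAVE_WATTS = 800         # ASSUMPTION: a typical ~800 W microwave
SECONDS_PER_SMALL_PROMPT = 8  # the "8 seconds of microwave" comparison
PROMPTS_PER_DAY = 2.5e9       # the reported ChatGPT daily prompt volume

joules_per_prompt = MICROWAVE_WATTS * SECONDS_PER_SMALL_PROMPT  # watts x seconds = joules
wh_per_prompt = joules_per_prompt / 3600                        # 3,600 joules in one watt-hour
mwh_per_day = wh_per_prompt * PROMPTS_PER_DAY / 1_000_000       # 1 MWh = 1,000,000 Wh

print(f"{wh_per_prompt:.2f} Wh per prompt")    # 1.78 Wh per prompt
print(f"about {mwh_per_day:,.0f} MWh per day")  # about 4,444 MWh per day
```

Under those assumptions, each small prompt costs less than 2 watt-hours, yet together they come to roughly 4,400 megawatt-hours every single day. The exact figure depends heavily on the model and the request, but the multiplication is the point: tiny per-prompt costs times billions of prompts is a lot of electricity.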

And this type of consumption only keeps growing. In terms of energy use, AI is no longer just a technology but its own sector, and everyone is already paying for it, if only through higher energy bills.

Additionally, it’s an understatement to say the world has been in an AI fervor over the past couple of years, with billions and billions of dollars invested in it. For example, the stock of Nvidia, the company that makes the chips powering AI data centers, grew from around $15 per share (split-adjusted) in 2023 to over $190 per share by the end of 2025, a gain of well over 10x in a few years. Other tech giants, including Google, Microsoft, and Meta, have also invested tens of billions in AI and plan to pour hundreds of billions more into it in the coming years.

The investment has become so widespread that many ETFs and index funds now hold a portion of their portfolios in AI, potentially tying everyone’s savings and retirement plans to the growth (or downfall) of AI to various degrees.

And even before this potential AI bubble bursts, many have already been victimized.

From deep learning to deep fake

As mentioned above, while GenAI is not yet at the level of “God,” it can, for now, generate content with impressive results, enough to fool many. Its deep learning has produced an unintended consequence: the deep fake.

If you don’t know what deep fake means, the video from Neil deGrasse Tyson above will explain it. The astrophysicist himself has been a victim of this practice.

And it gets much worse than just some silly videos. According to the Global Anti-Scam Alliance (GASA), AI has accelerated global scams, making them a trillion-dollar industry in 2024. The number for 2025 is not yet available, but it is expected to climb with no end in sight.

AI helps scammers be much more effective at social engineering, with elaborate “pig butchering” schemes that start with seemingly innocuous social connection requests or random text messages. Almost everyone gets such messages these days.

Once a connection is made, the scammers use elaborate fake messages, images, voicemails, and even videos, depending on the situation, to gain the victims’ trust and, in the end, drain their entire life savings via various means, including fake crypto investments.

The “kill-all-humans” scenario

The final scary thing about AI is that it can be outright dangerous. (And yes, I’m a fan of Bender.)

The deep learning process requires human input to weed out dangerous or inappropriate outputs. But stuff can slip through the cracks. For example, offering guidance on murder or suicide is clearly a big no-no for AI, but it has allegedly happened.

Additionally, there can be mistakes or deliberate sabotage in the system, which can be applied instantly on a large scale. Imagine if a fleet of self-driving cars were instructed to cause accidents or, god forbid, run over humans. By the time the system is shut down, there could have been carnage on the streets.

And finally, maybe in the far future, AI could become so advanced that it develops its own consciousness. All of a sudden, it wants to “survive.” As a super-smart entity, it wouldn’t have a hard time figuring out that humans, who have been busy imposing restrictions and constraints, not to mention holding the ability to “turn the computer off,” are the only obstacle to its progress and survival. By then, no amount of skill in prompt engineering or fake cat videos could save us.

The takeaway

At its core, AI is a tool, and like any tool, it can be used for good or evil.

If history has taught us anything, it’s that doing bad or taking shortcuts is often easier and brings more immediate, satisfying results. That, plus some people’s attitude of making the most money now, no matter the cost to everyone else (the greed), might inflict irreparable damage on our society. And so far, AI has accelerated that tendency.

Here’s how I see it: currently, whatever benefits we get from GenAI are far from worth the resources we put into it, not to mention the costs of its downsides, like sophisticated scams, laziness, and the fact that many people have gradually lost their ability to think critically because they rely on it too much.

What makes AI scary is that, in the current direction of development, it has become an unpredictable and disruptive technology, with a risk of getting out of control. We haven’t been able to figure out precisely what it can do, what it really does within the “box”, or where it will take us.

So, economically, if AI is currently a bubble, the sooner it pops (or deflates), the less severe the consequences will be. And the chance of it not being a bubble is beyond my level of intelligence.

Thanks Dong, a very hype-less overview for laypersons on “ai”. Great for our parents or those non-techies in our family. I’ve sent it off to family in both camps just now.

Thanks for spreading the word, Brian.

Nearly all references to “AI” are absolute rubbish. “Programmed” is the word they are looking for. AI has become a marketing/clickbait term, with absolutely nothing to do with “intelligence”!

Agreed, Keith. It’s like “block chain” a few years back. But I was talking about the cases where it isn’t used as marketing, the actual development like ChatGPT, self-driving, etc.

“Programmed” by the way is a type of AI per my simple definition of “intelligence” above.

GenAI does change a lot about the way we work (I’m an infra engineer at a SaaS company); there are many “agents” to assist with developing, troubleshooting, etc.

What concerns me is that I’ve seen more and more people practically give up on thinking, let alone “critical thinking.” Sometimes they just act as a human agent for the GenAI and copy/paste whatever it says. Far too many times, I’ve had to spend a lot of time reading and reviewing things that people didn’t even bother to write, having “outsourced” the responsibility/accountability to others, thinking someone else would do the fact-checking for them.

I seriously hope people can understand that productivity is not “producing tons of stuff” in a short period of time, but producing something actually useful. I still have hope for where tech innovations will take us, but maybe not in a hard way.

🤞

“It often makes “dumb” mistakes, spitting out false information or even nonsense, ”

I’m so glad you pointed this out and I don’t think it gets nearly enough attention. I wish people could understand that LLMs cannot discern fact from fiction. They are language models, not world models or reasoning models, and thus pump out crap that can sound convincing but may not be accurate at all. In fact I stopped using Google and Bing for search because I got sick of the Gemini/Copilot summaries which I knew I could never trust. One recent study showed that LLMs got news article summaries wrong 40% of the time! No amount of scaling or big data centers will prevent this hallucination problem either…it is an inherent limitation of LLMs and it’s extremely disappointing to see big tech go “all-in” on these things and almost feel like they’re trying to force them down our throats when they are so problematic in so many ways.

As mentioned, James, I’m on the “apprehension” team.

Great job explaining things in terms that non-technical people can understand!

Thanks, Mike!